
Following the Istanbul Innovation Days held in March 2025, we are releasing a series of articles capturing key insights, bold ideas and solutions that emerged from the conversations on institutional innovation. From reimagining partnerships to leveraging frontier technologies, these stories showcase opportunities to rethink and transform institutional paradigms.

We all know that our institutions are too siloed, too analogue, and too inflexible for the polycrisis we face. What’s more, they struggle with challenges that don’t fit neatly into existing ministries and agencies. In the age of AI, if we’re serious about building and redesigning institutions, we need to understand how AI will allow us to reshape institutional capabilities in government. Given recent advances in development and adoption, the question isn’t whether to integrate AI, but how to do so while preserving human agency and democratic values. This is what our workshop on AI agents at this year’s Istanbul Innovation Days was all about.

The transformation ahead is profound. The Global Government Technology Centre describes it as the “Agentic State”: a shift in governance as fundamental as the invention of the bureaucratic state in the 19th century. Where bureaucracy brought standardization and process, the agentic state will, according to the authors, be driven by outcomes, not process; customized and personalized, not consistent and formulaic; real-time, not predictable.

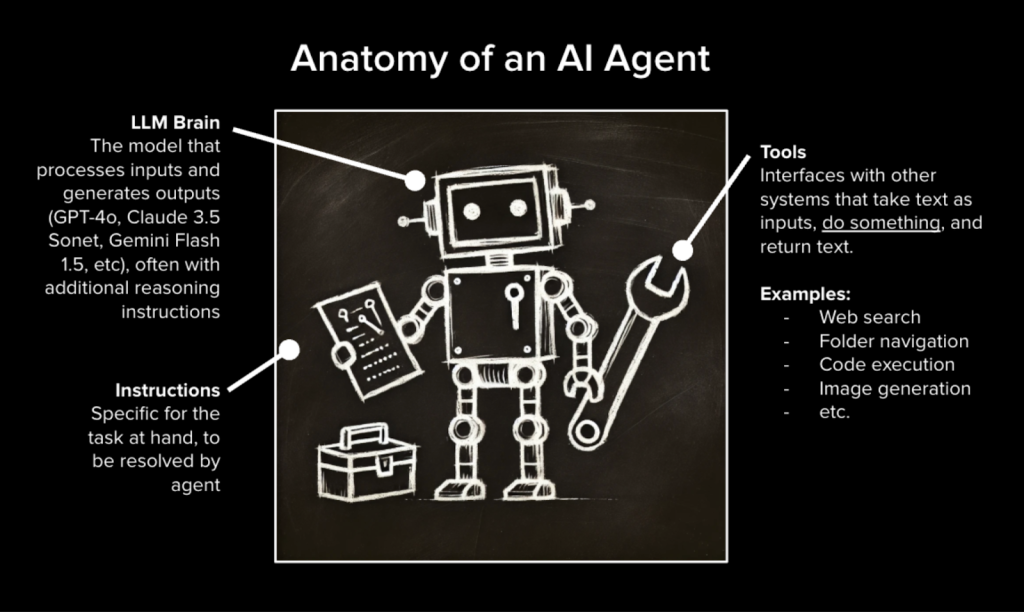

What are AI agents?

AI agents are autonomous systems that can execute complex tasks based on natural language instructions. Unlike traditional software that follows fixed pathways, agents autonomously select and combine actions to achieve goals. They combine a Large Language Model “brain” with real-world tools, enabling them to read documents, analyze data, and complete multi-step processes independently.

Our hands-on workshop began with a live demonstration of current agentic AI capabilities. We showed how an AI agent would perform on a typical bureaucratic task: reviewing candidate CVs against job requirements. With just basic file operations, the agent independently read (mock) applications, compared qualifications, and produced structured summaries, work that typically consumes hours of human effort. This is not simple automation but intelligent task completion that understands context and adapts to variations.
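To make the shape of such an agent concrete, here is a minimal sketch of the loop described above: a planner repeatedly picks the next tool until the task is done. It is not the workshop's actual demo. The names `Summary`, `plan_next_step` and `run_agent` are hypothetical, the planner is a hard-coded stand-in for the LLM “brain”, and qualifications are compared by naive keyword matching where a real agent would reason over the text.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Summary:
    """Structured result for one application (hypothetical format)."""
    candidate: str
    matched: list[str]
    missing: list[str]


def plan_next_step(state: dict) -> str:
    """Stand-in for the LLM: choose the next tool from the current state."""
    if state["files"] is None:
        return "list_files"
    if state["unread"]:
        return "read_and_compare"
    return "finish"


def run_agent(folder: Path, requirements: list[str]) -> list[Summary]:
    """Loop until the planner decides the task is complete."""
    state = {"files": None, "unread": [], "summaries": []}
    while True:
        step = plan_next_step(state)
        if step == "list_files":
            # Tool 1: discover the application files.
            state["files"] = sorted(folder.glob("*.txt"))
            state["unread"] = list(state["files"])
        elif step == "read_and_compare":
            # Tool 2: read one CV and compare it with the requirements.
            path = state["unread"].pop(0)
            text = path.read_text(encoding="utf-8").lower()
            matched = [r for r in requirements if r.lower() in text]
            missing = [r for r in requirements if r.lower() not in text]
            state["summaries"].append(Summary(path.stem, matched, missing))
        else:
            return state["summaries"]
```

Calling `run_agent(Path("applications"), ["Python", "project management"])` on a folder of plain-text CVs would return one `Summary` per file. In a real agent, the fixed rules in `plan_next_step` are the part an LLM would replace, which is what lets it adapt to variations rather than follow a single fixed pathway.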

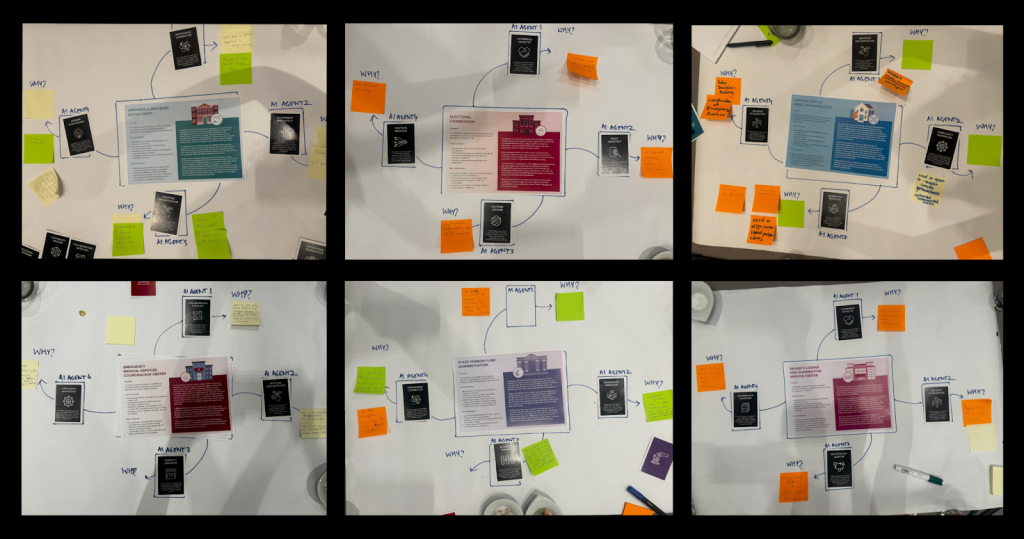

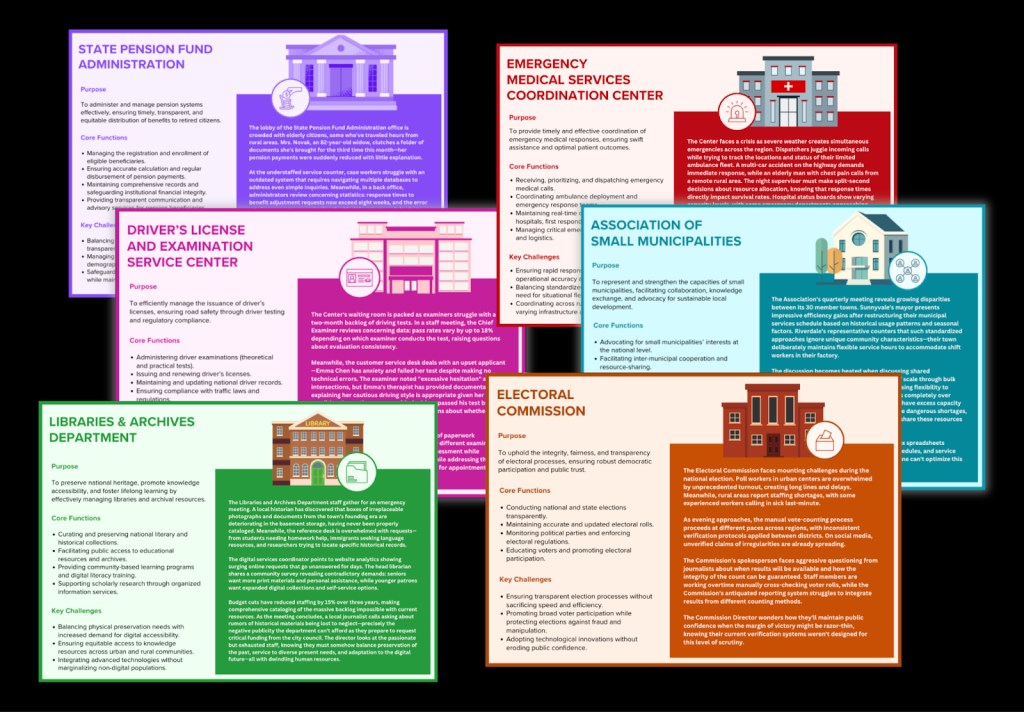

To explore the potential of AI agents for institutional innovation, participants worked with six hypothetical institutional scenarios during the workshop: an Electoral Commission, an Association of Small Municipalities, a Driver’s License Service Center, a Libraries and Archives Department, an Emergency Medical Services Coordination Center, and a State Pension Fund. Each scenario represented common institutional challenges that transcend national boundaries.

In small groups, workshop participants studied these fictional institutions and mapped out potential futures where AI agents could address specific institutional challenges. This allowed us to explore possibilities without the constraints of existing bureaucratic structures or political sensitivities. The insights that emerged revealed patterns applicable across different institutional contexts:

Every hypothetical institution faced the same core challenge: fragmentation. The fictional Electoral Commission couldn’t connect voter data systems. The Emergency Services scenario involved multiple agencies unable to synthesize information during crises. The Small Municipalities example showed towns duplicating efforts their neighbors had already solved.

AI agents might help with navigating this complexity through what the UK government refers to as “orchestration” and “choreography”. Agents could serve as connectors, translating between department languages, identifying patterns across siloed databases, and surfacing relevant precedents from institutional memory.

Citizens increasingly see institutions as arbitrary black boxes. The institutional scenarios we used in the workshop highlighted how AI agents might help provide consistent levels of service and decision-making through standardized processes that treat every citizen the same while maintaining flexibility for edge cases.

For example, when AI agents are used to explain why a permit was denied or how a benefit was calculated, they can make the institutional logic behind that decision visible and therefore contestable. This transparency becomes especially critical as institutions face new pressures like misinformation threats. Clear, consistent, explainable decisions might even help rebuild trust in government.

The hypothetical Libraries and Archives Department scenario revealed another universal problem: institutions drowning in their own information. Reports gather dust, precedents are forgotten, and knowledge walks out the door with retiring employees.

AI agents could transform institutions from passive repositories to active knowledge brokers. They wouldn’t just store and retrieve information, but actively synthesize and translate knowledge across contexts. This could become essential for institutions trying to adapt quickly to new challenges by learning from past responses.

These opportunities align well with IID’s focus on building resilient institutions for an uncertain world. But they also raise fundamental challenges that demand careful consideration:

Workshop participants repeatedly voiced a central tension: “AI should be about simplifying our work but not about putting responsibility away.” As institutions delegate complex tasks to AI agents, accountability becomes murky. Who takes responsibility when things go wrong? How do we maintain democratic oversight? To address this governance challenge we need new frameworks for human-AI collaboration with clear boundaries about which decisions require human judgment, audit trails for AI actions, and mechanisms for citizens to contest automated decisions.
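As one illustration of what such a framework could look like in software, the sketch below combines the three elements named above: a boundary for decisions that require human judgment, an audit trail of agent actions, and a way to contest a decision. Everything in it is an assumption made for illustration, including the `REQUIRES_HUMAN` policy set, the action names, and the `AuditedAgent` class. It does not represent any existing government system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: decision types that always need human sign-off.
REQUIRES_HUMAN = {"deny_permit", "reduce_benefit"}


@dataclass
class AuditEntry:
    timestamp: str
    action: str
    case_id: str
    reason: str
    status: str  # "executed", "pending_human_review" or "contested"


@dataclass
class AuditedAgent:
    log: list[AuditEntry] = field(default_factory=list)

    def propose(self, action: str, case_id: str, reason: str) -> AuditEntry:
        """Record every proposed action; hold sensitive ones for a human."""
        status = "pending_human_review" if action in REQUIRES_HUMAN else "executed"
        entry = AuditEntry(
            datetime.now(timezone.utc).isoformat(), action, case_id, reason, status
        )
        self.log.append(entry)
        return entry

    def contest(self, case_id: str) -> list[AuditEntry]:
        """Mark all entries for a case as contested so a human re-examines them."""
        flagged = [e for e in self.log if e.case_id == case_id]
        for entry in flagged:
            entry.status = "contested"
        return flagged
```

The design choice worth noting is that the agent never executes a sensitive action directly: it can only record a proposal with its stated reason, which keeps responsibility with a named human reviewer and gives citizens a record to contest.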

The efficiency gains from AI agents are seductive, especially when institutions face pressure to do more with less. But as the UNDP’s 2025 Human Development Report emphasizes, we must go beyond focusing exclusively on AI for automation. AI’s potential is best leveraged to augment human strengths and judgment, rather than replace them. We must guard against creating institutions that only focus on processing speed. Technology should handle routine complexity while humans focus on the exceptions, ethics, and empathy that define meaningful public service.

AI agents could help institutions consider perspectives they currently overlook. Environmental impacts, future generations, or communities without direct representation could all gain a voice through properly trained agents. But who decides which perspectives to include? How do we prevent biases from perpetuating existing inequalities?

As institutions embark on this transformation, several principles emerged from our workshop that align with IID’s broader institutional innovation agenda:

Early signals of institutional innovation are already emerging. Kazakhstan has created a dedicated Ministry for AI, while Albania has appointed an AI-powered assistant as an official minister for public procurement. These experiments, whether bold or controversial, show that governments are beginning to reimagine institutional structures for the AI age.

The hypothetical institutions in our workshop may have been fictional, but the challenges they face are real and universal. Every government institution will need to grapple with how AI will reshape its capabilities and responsibilities. In doing so, governments should resist the temptation to see AI purely as a cost-cutting tool for greater efficiency through automation, and instead design systems where AI agents augment human judgment, allowing public servants to focus on what cannot be encoded: creativity, empathy, and trust-building.

At UNDP, we’re working with governments to develop AI governance frameworks and ethical guidelines, build digital infrastructure and data systems, strengthen institutional capabilities for AI adoption, and equip public servants with AI skills. This transformation isn’t about building smarter bureaucracies. It’s about creating institutions worthy of the challenges we face.

Read part two of this blog series.

For those interested in exploring these ideas further, the institutional profile cards and AI agent capability cards from our workshop are available here, and the complete workshop presentation deck is available here.
